Research

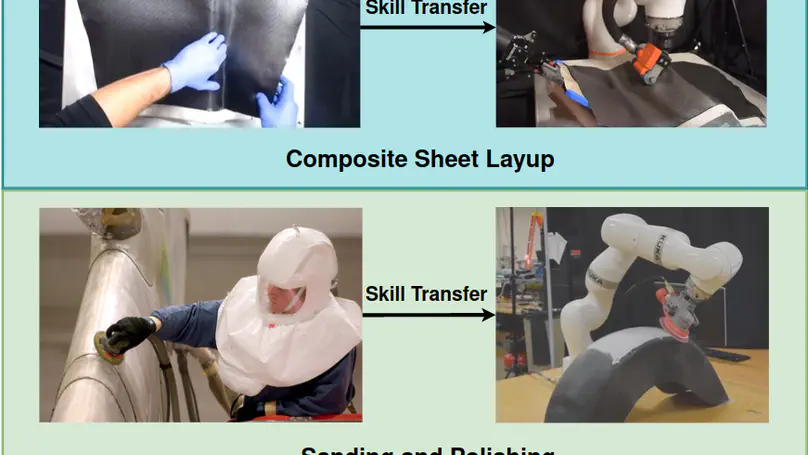

Humans perform many dynamic tasks with ease by learning a high-level decision-making policy. When they encounter a new scenario, they make decisions based on this policy rather than relearning from scratch. One of the key goals of this research is to enable robots to learn from humans in an intuitive and natural way, so that they can be more easily integrated into a wide range of tasks and environments.

Synthetic image data has proved beneficial for segmentation tasks where real data is scarce. However, most methods for generating it fail to capture the physics of the components involved and therefore produce unrealistic images. With the aid of physics simulators, we explore different avenues for generating physics-informed synthetic images.

Compliant or deformable objects are challenging to manipulate due to their inherent physical properties, yet the majority of the objects humans interact with are deformable. This work focuses on enabling robots to handle such objects with greater efficiency and flexibility, and to adapt to changes in the objects or their surroundings.